Censorship-Resistant Indexing and Search for Web3

This blog post has been co-written by Georgy Ishmaev. This work is funded by the Ethereum Foundation.

Depending upon who you ask, Web3 is experiencing explosive growth or still has to live up to the expectations. But either way, the decentralized apps we have now already have real users. And with these users come difficult questions of usability and, yes, inevitable design compromises sacrificing something to get this usability now. Sometimes these compromises seem to contradict the very ideals of Web3, such as decentralization, privacy, and censorship. This is almost a philosophical question. But if it is the same as Web2 on these metrics, we might as well stop trying to make it. Thankfully, there seems to be some mild social consensus and acknowledgment of this thesis among parts of the blockchain community, such as the Ethereum ecosystem.

In no small degree, this acknowledgment was stimulated by sad and disturbing developments around Tornado Cash (TC) last year, and that reverberated through the community. Consequently, the situation around TC transactions censorship by flash bots relays that, for a brief moment, became a centralized bottleneck, reminding everyone why this may not be the best idea to create critical dependencies on a trusted party in a permissionless environment.

So for Web3 (or whatever alternative to the current web) to have a winning chance, we must work consistently towards decentralization on different layers and across a broad range of use cases. Layer 1 solutions such as Ethereum indicate a map showing not only problematic points of the decentralization process but also positive examples of how we can progress on this process. For example, comparing the progress in permissionless consensus algorithms with decentralized storage layer solutions, we can see that the latter needs to catch up. Imagine if we get all kinds of decentralized apps, only for them to store user data on AWS; not fun at all. Hopefully, we will eventually get to the point where we have not only permissionless, trustless Dapps execution but also permissionless, trustless data storage. Imagine, it is already almost there. Great, now we can enjoy it all: “Just need to search for the address of this new Dapp in Google.”

Wait a minute; this is not how it was supposed to be at all! So yes, we need to consider not just the decentralization of different layers but also the decentralization of enabling services on the application level, which will be critical for the usability of Web3. Search engines and indexers are essential applications for the web now and likely will remain as such in the coming future. But if the current state of affairs is any indication, we are already becoming dependent on centralized blockchain explorers to search addresses, transactions or sometimes to check your asset portfolio. While some say this is not a big deal (as frontends can always be replaced), the problem runs deeper. Proper chain indexing is a serious resource-heavy business that, at the moment, tends to favor centralized providers. Even in the best-case scenario, these providers will still be vulnerable to legal pressure. And in the much worse scenario, indexing will be done by dubious chain analytics companies who already peddle their services to De-Fi frontends under the label of “digital asset compliance & risk management.”

So having a functioning decentralized search and indexing solution for Web3 is not an option but a critical necessity. The good thing is that we already see some components of these critical architectures being deployed and tested. Honorable mention goes to projects like Trueblocks, the Rotki app, and other solutions based on local-first principles. Indexing solutions, such as The Graph, try to experiment with token-based business models. However, it is safe to say that the design space in Web3 search and indexing solutions is far from being fully explored. A research and design challenge is to develop modular and interoperable solutions comprising a scalable decentralized indexing and search engine that can run in a peer-to-peer (p2p) network.

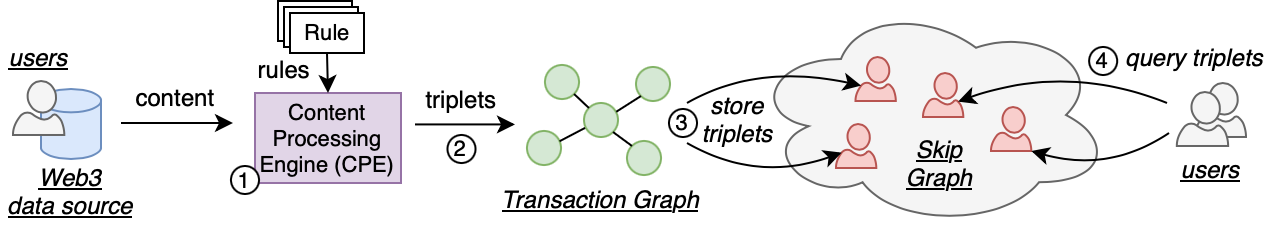

In a recent article, we introduce DeScan, a peer-to-peer system for transaction indexing and searching in Web3. The scheme below shows its functioning.

Some interesting research questions immediately pop up here. Firstly, it is decentralized, but it does not mean the system is censorship resistant. In peer-to-peer settings, we can still get adversarial nodes that will try deleting requests and delivering search results. For example, a node might deliberately withhold information. Secondly, we will get simply non-responsive nodes that will fail for different reasons; for example, their Internet connection is unstable. Thirdly, what kind of storage and bandwidth overhead will our system introduce? For our system to be usable, this functionality should be integrated or modularly compatible with existing blockchain clients, ideally, without the need to run your own archival node and without the need for constant requests to full nodes. Why is this so desirable? Well, the hardware cost of the archival node for Ethereum is over 14TB (at the moment of writing). While storage is getting cheaper, in the long run, we need more diversity than that. And if only a few full nodes have to service a vastly larger number of dependent light clients, we get a severe scalability bottleneck. So the critical component here is the choice of a decentralized data structure for indexes that can be implemented as a peer-to-peer solution.

The popular choice for a distributed data structure in a peer-to-peer network is a family of Distributed Hash Tables (DHTs). They have some compelling properties, for example, logarithmic message and time complexity for searching, but they also have certain limitations. A fundamental limitation is that standard DHTs do not allow making range queries. In Web3, data can sometimes be ordered; for example, market orders in a decentralized marketplace are sorted by price.

A somewhat less popular but interesting alternative here is a Skip Graph data structure with similar algorithmic complexities as a DHT and support for range queries. The Skip Graph is a distributed data structure based on Skip Lists and enables search in a decentralized overlay. It consists of one or more nodes where each node has a key and a membership vector. The membership vector is usually a random bit string. A node can represent a physical machine or a peer in a peer-to-peer network, but it can also be more granular and describe some data that a particular peer stores. The Skip Graph consists of multiple levels and the main idea is that nodes that have i common prefix bits in their membership vector keep a reference to each other on level i. When searching for a node with a particular key, the search request gets routed through the network starting at the highest level, working its way downwards to the lowest level. This takes logarithmic message and time complexity, similar to a DHT.

However, the naive implementation of a Skip Graph does not address the problem of adversarial and failing nodes. Still, would it be possible to use a Skip Graph for decentralized transaction indexing and search that is censorship-resistant and can be integrated with light clients? Our answer to that is yes. We can modify the Skip Graph to add redundancy and fault tolerance without introducing prohibitively high overhead. Fourth specific modifications to the original Skip Graph strucure can make it tolerant to unresponsive and adversarial nodes:

- Acknowledgments of Search messages

- Extended routing tables

- Replicating triplets on the storage layer

- Operating multiple Skip Graph

The feasibility of these modifications has been evaluated experimentally using a data set with Ethereum transactions. It shows that our modified Skip Graph can deal with up to 25% adversarial peers and 35% unresponsive peers without significant system degradation. Queries are completed well within a second, even with over 10000 peers. Furthermore, the network and storage overhead induced by individual peers decreases as the network grows, and workload distribution evens out. This means that DeScan, when implemented as a peer-to-peer overlay, can feasibly integrate with light clients that contribute a small amount of system resources.

Starting from here, we can consider more modules to enable richer functionality, crafting different rules for different types of Web3 content and content discovery mechanisms in the future. But this is already a topic for a separate blog post. If you want more technical details, please read our research paper, which presents additional explanations and experimental results. If you are interested in these ideas or would like to share yours feel free to contact us.

Enjoy Reading This Article?

Here are some more articles you might like to read next: